AI/ML powered features

GitLab is creating AI-assisted features across our DevSecOps platform. These features aim to help increase velocity and solve key pain points across the software development lifecycle.

Enable AI/ML features

Introduced in GitLab 16.0 and actively being rolled out.

Prerequisites:

- You must have the Owner role for the group.

To enable AI/ML features for a top-level group:

- Enable Experiment features.

- Enable third-party AI features (enabled by default). To disable AI features powered by third-party APIs, clear this setting.

These settings work together so you can have a mix of both experimental and third-party AI features.

Generally Available AI features

When a feature is Generally Available, it does not require Experiment features to be enabled. Some of these features might require third-party AI features to be enabled.

The following feature is Generally Available:

Beta AI features

Beta features do not require Experiment features to be enabled.

The following features are in Beta:

Experiment AI features

Experiment AI features require Experiment features to be enabled as well as third-party AI services to be enabled.

The following features are in Experiment:

- Fill in merge request templates

- Summarize merge request changes

- Summarize my merge request review

- Suggested merge or squash commit message

- Generate suggested tests in merge requests

The rest of the features described on this page are also in the Experiment phase.

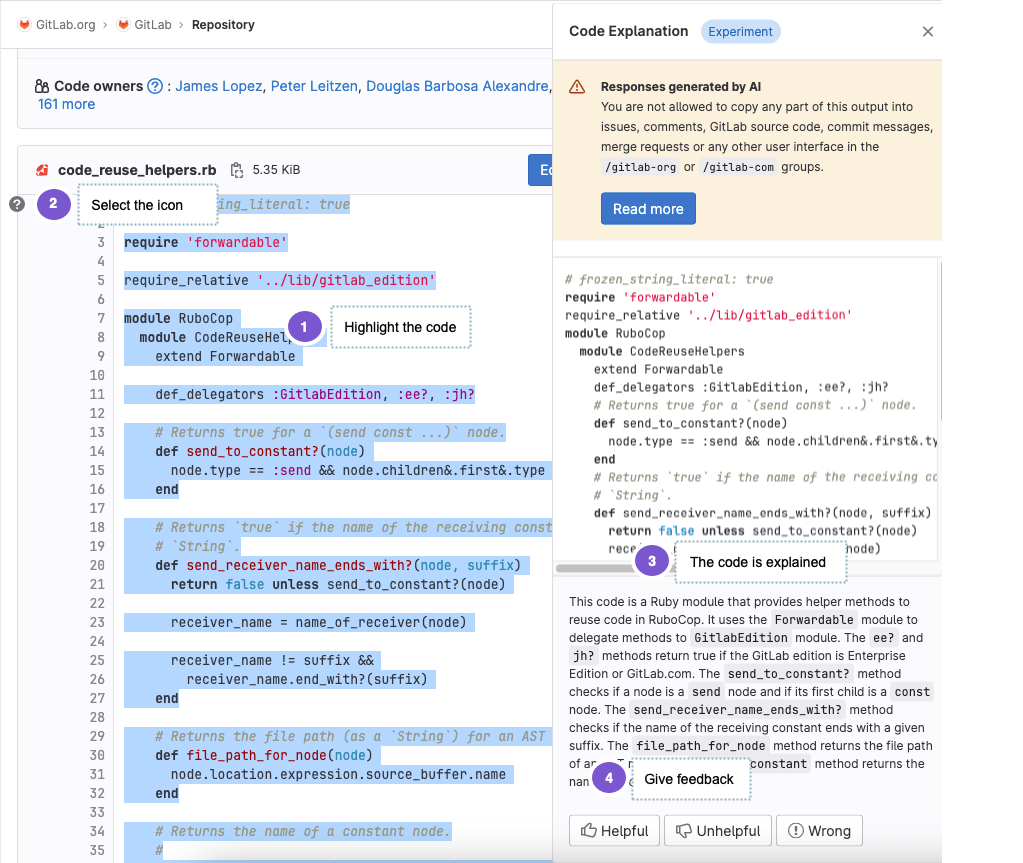

Explain Selected Code in the Web UI (ULTIMATE SAAS)

Introduced in GitLab 15.11 as an Experiment on GitLab.com.

This AI feature is an Experiment on GitLab.com that is powered by Google's Codey for Code Chat (codechat-bison).

GitLab can help you get up to speed faster if you:

- Spend a lot of time trying to understand pieces of code that others have created, or

- Struggle to understand code written in a language that you are not familiar with.

By using a large language model, GitLab can explain the code in natural language.

Prerequisites:

Additional prerequisites beyond the two above.

- The project must be on GitLab.com.

- You must have the GitLab Ultimate subscription tier.

- You must be a member of the project with sufficient permissions to view the repository.

To explain your code:

- On the left sidebar, at the top, select Search GitLab ({search}) to find your project.

- Select any file in your project that contains code.

- On the file, select the lines that you want to have explained.

- On the left side, select the question mark ({question}). You might have to scroll to the first line of your selection to view it. This sends the selected code, together with a prompt, to provide an explanation to the large language model.

- A drawer is displayed on the right side of the page. Wait a moment for the explanation to be generated.

- Provide feedback about how satisfied you are with the explanation, so we can improve the results.

You can also have code explained in the context of a merge request. To explain code in a merge request:

-

On the left sidebar, at the top, select Search GitLab ({search}) to find your project.

-

On the left sidebar, select Code > Merge requests, then select your merge request.

-

On the secondary menu, select Changes.

-

On the file you would like explained, select the three dots ({ellipsis_v}) and select View File @ $SHA.

A separate browser tab opens and shows the full file with the latest changes.

-

On the new tab, select the lines that you want to have explained.

-

On the left side, select the question mark ({question}). You might have to scroll to the first line of your selection to view it. This sends the selected code, together with a prompt, to provide an explanation to the large language model.

-

A drawer is displayed on the right side of the page. Wait a moment for the explanation to be generated.

-

Provide feedback about how satisfied you are with the explanation, so we can improve the results.

We cannot guarantee that the large language model produces results that are correct. Use the explanation with caution.

GitLab Duo Chat (ULTIMATE SAAS)

Introduced in GitLab 16.0 as an Experiment.

This feature is an Experiment on GitLab.com. It requires the group-level third-party AI features setting to be enabled.

GitLab Duo Chat is powered by Anthropic's Claude-2.0 and Claude-instant-1.1 large language models and OpenAI's text-embedding-ada-002 embeddings. The LLMs are employed to analyze user questions to collect appropriate context data from the user's project, and to generate responses. In some cases, embeddings are used to embed user questions and find relevant content in GitLab documentation to share with the LLMs to generate an answer.

You can get AI generated support from GitLab Duo Chat about the following topics:

- How to use GitLab.

- Questions about an issue.

- Summarizing an issue.

Example questions you might ask:

What is a fork?How to reset my passwordSummarize the issue <link to your issue>Summarize the description of the current issue

The examples above all use data from either the issue or the GitLab documentation. However, you can also ask to generate code, CI/CD configurations, or to explain code. For example:

Write a hello world function in RubyWrite a tic tac toe game in JavaScriptWrite a .gitlab-ci.yml file to test and build a rails applicationExplain the following code: def sum(a, b) a + b end

You can also ask follow-up questions.

This is an experimental feature and we're continuously extending the capabilities and reliability of the chat.

- In the lower-left corner, select the Help icon. The new left sidebar must be enabled.

- Select Ask in GitLab Duo Chat. A drawer opens on the right side of your screen.

- Enter your question in the chat input box and press Enter or select Send. It may take a few seconds for the interactive AI chat to produce an answer.

- You can ask a follow-up question.

- If you want to ask a new question unrelated to the previous conversation, you may receive better answers if you clear the context by typing

/resetinto the input box and selecting Send.

To give feedback about a specific response, use the feedback buttons in the response message. Or, you can add a comment in the feedback issue.

NOTE: Only the last 50 messages are retained in the chat history. The chat history expires 3 days after last use.

Summarize issue discussions (ULTIMATE SAAS)

Introduced in GitLab 16.0 as an Experiment.

This feature is an Experiment on GitLab.com that is powered by OpenAI's GPT-3. It requires the group-level third-party AI features setting to be enabled.

You can generate a summary of discussions on an issue:

- In an issue, scroll to the Activity section.

- Select View summary.

The comments in the issue are summarized in as many as 10 list items. The summary is displayed only for you.

Provide feedback on this experimental feature in issue 407779.

Data usage: When you use this feature, the text of public comments on the issue are sent to the large language model referenced above.

Show deployment frequency forecast (ULTIMATE SAAS)

Introduced in GitLab 16.2 as an Experiment.

This feature is an Experiment on GitLab.com.

In CI/CD Analytics, you can view a forecast of deployment frequency:

- On the left sidebar, at the top, select Search GitLab ({search}) to find your project.

- Select Analyze > CI/CD analytics.

- Select the Deployment frequency tab.

- Turn on the Show forecast toggle.

- On the confirmation dialog, select Accept testing terms.

The forecast is displayed as a dotted line on the chart. Data is forecasted for a duration that is half of the selected date range. For example, if you select a 30-day range, a forecast for the following 15 days is displayed.

Provide feedback on this experimental feature in issue 416833.

Generate issue descriptions (ULTIMATE SAAS)

Introduced in GitLab 16.3 as an Experiment.

This feature is an Experiment on GitLab.com that is powered by OpenAI's GPT-3. It requires the group-level third-party AI features setting to be enabled.

You can generate the description for an issue from a short summary.

- Create a new issue.

- Above the Description field, select AI actions > Generate issue description.

- Write a short description and select Submit.

The issue description is replaced with AI-generated text.

Provide feedback on this experimental feature in issue 409844.

Data usage: When you use this feature, the text you enter is sent to the large language model referenced above.

Data Usage

GitLab AI features leverage generative AI to help increase velocity and aim to help make you more productive. Each feature operates independently of other features and is not required for other features to function.

Progressive enhancement

These features are designed as a progressive enhancement to existing GitLab features across our DevSecOps platform. They are designed to fail gracefully and should not prevent the core functionality of the underlying feature. You should note each feature is subject to its expected functionality as defined by the relevant feature support policy.

Stability and performance

These features are in a variety of feature support levels. Due to the nature of these features, there may be high demand for usage which may cause degraded performance or unexpected downtime of the feature. We have built these features to gracefully degrade and have controls in place to allow us to mitigate abuse or misuse. GitLab may disable beta and experimental features for any or all customers at any time at our discretion.

Third party services

Data privacy

Some AI features require the use of third-party AI services models and APIs from: Google AI and OpenAI. The processing of any personal data is in accordance with our Privacy Statement. You may also visit the Sub-Processors page to see the list of our Sub-Processors that we use to provide these features.

Group owners can control which top-level groups have access to third-party AI features by using the group level third-party AI features setting.

Model accuracy and quality

Generative AI may produce unexpected results that may be:

- Low-quality

- Incoherent

- Incomplete

- Produce failed pipelines

- Insecure code

- Offensive or insensitive

GitLab is actively iterating on all our AI-assisted capabilities to improve the quality of the generated content. We improve the quality through prompt engineering, evaluating new AI/ML models to power these features, and through novel heuristics built into these features directly.